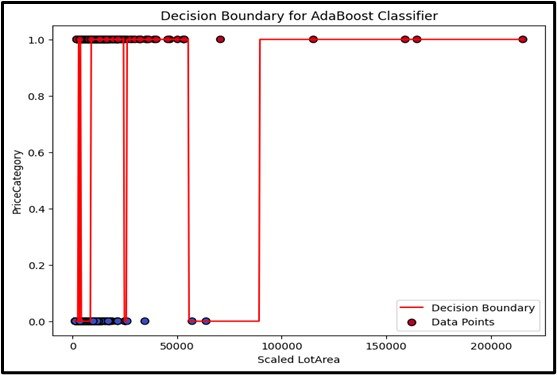

AdaBoost Classification

AdaBoost Classification is an ensemble learning method that combines multiple weak classifiers to form a strong predictive model. It trains the weak classifiers one after another. After each round it increases the weights of the training points the current model misclassified, so the next classifier focuses on them, and it gives each weak classifier a vote weighted by its accuracy. The final prediction is this weighted vote, which is typically much more accurate than any single weak classifier.
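For the binary case, the adaptive process can be written out as the classic discrete AdaBoost formulation below, assuming labels $y_i \in \{-1, +1\}$. This is a reference sketch; scikit-learn's `AdaBoostClassifier` implements the multi-class SAMME variant, which scales the classifier votes differently.

```latex
\begin{aligned}
&\text{Given } (x_i, y_i),\ y_i \in \{-1, +1\},\ i = 1,\dots,N, \text{ start with } w_i^{(1)} = \tfrac{1}{N}. \\
&\text{For } t = 1,\dots,T: \\
&\quad \varepsilon_t = \sum_{i=1}^{N} w_i^{(t)}\,\mathbb{1}\!\left[h_t(x_i) \neq y_i\right] \quad (\text{weighted error of weak classifier } h_t) \\
&\quad \alpha_t = \tfrac{1}{2}\ln\frac{1-\varepsilon_t}{\varepsilon_t} \quad (\text{vote weight of } h_t) \\
&\quad w_i^{(t+1)} = \frac{w_i^{(t)}\exp\!\left(-\alpha_t\, y_i\, h_t(x_i)\right)}{Z_t} \quad (Z_t \text{ makes the weights sum to } 1) \\
&H(x) = \operatorname{sign}\!\left(\sum_{t=1}^{T} \alpha_t\, h_t(x)\right)
\end{aligned}
```

Because $y_i h_t(x_i) = -1$ exactly when $h_t$ misclassifies $x_i$, the update multiplies the weight of every misclassified point by $e^{\alpha_t}$ and every correctly classified point by $e^{-\alpha_t}$, which is what shifts the next classifier's attention to the hard cases.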

- Concept: AdaBoost Classification (Adaptive Boosting) is an ensemble learning technique that combines several weak classifiers to create a robust predictive model.

- Ensemble of Weak Classifiers: Combines multiple simple models (weak classifiers, commonly one-level decision trees called decision stumps) to form a stronger overall model.

- Adaptive Weighting: In each iteration, increases the weights of misclassified training instances and assigns each weak classifier a weight based on its accuracy.

- Applications: AdaBoost Classification is widely used in:

  - Image Recognition: Improving the accuracy of object detection and classification.

  - Medical Diagnosis: Enhancing the prediction of disease presence or risk.
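A minimal from-scratch sketch of the adaptive weighting described above, assuming binary labels in {-1, +1} and decision stumps as the weak classifiers. The helper names (`stump_predict`, `fit_stump`, `adaboost_fit`, `adaboost_predict`) are hypothetical and chosen for illustration; this is not scikit-learn's implementation.

```python
import numpy as np


def stump_predict(X, feature, threshold, polarity):
    """Predict +1 where polarity * (x - threshold) >= 0, else -1."""
    return np.where(polarity * (X[:, feature] - threshold) >= 0, 1, -1)


def fit_stump(X, y, w):
    """Exhaustively pick the stump with the lowest weighted error under weights w."""
    best = (np.inf, 0, 0.0, 1)  # (weighted error, feature, threshold, polarity)
    for feature in range(X.shape[1]):
        for threshold in np.unique(X[:, feature]):
            for polarity in (1, -1):
                pred = stump_predict(X, feature, threshold, polarity)
                err = w[pred != y].sum()  # w sums to 1, so this is the weighted error
                if err < best[0]:
                    best = (err, feature, threshold, polarity)
    return best


def adaboost_fit(X, y, n_rounds=10):
    """Discrete AdaBoost with stumps; y must contain only -1 and +1."""
    w = np.full(len(y), 1.0 / len(y))  # start with uniform sample weights
    model = []
    for _ in range(n_rounds):
        err, feature, threshold, polarity = fit_stump(X, y, w)
        err = np.clip(err, 1e-10, 1 - 1e-10)  # guard against log(0) on a perfect stump
        alpha = 0.5 * np.log((1 - err) / err)  # vote weight of this stump
        pred = stump_predict(X, feature, threshold, polarity)
        w = w * np.exp(-alpha * y * pred)  # misclassified points (y * pred = -1) gain weight
        w /= w.sum()  # renormalize so the weights form a distribution
        model.append((alpha, feature, threshold, polarity))
    return model


def adaboost_predict(model, X):
    """Sign of the alpha-weighted vote of all stumps (ties go to +1)."""
    score = sum(alpha * stump_predict(X, f, t, p) for alpha, f, t, p in model)
    return np.where(score >= 0, 1, -1)


# Toy data: positive at both ends, negative in the middle, so no single stump fits it.
X = np.arange(1, 9, dtype=float).reshape(-1, 1)
y = np.array([1, 1, -1, -1, -1, -1, 1, 1])
for rounds in (1, 3):
    acc = np.mean(adaboost_predict(adaboost_fit(X, y, n_rounds=rounds), X) == y)
    print(f"{rounds} round(s): training accuracy {acc:.2f}")
```

On this toy data a single stump classifies 6 of the 8 points correctly, while three boosted stumps classify all 8 correctly: each round re-weights the points the previous stumps missed, and the weighted vote combines stumps that no single threshold could replace.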

Enhancing Model

Purpose: Improve classification accuracy by combining multiple weak classifiers into a strong classifier.

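Input Data: Numerical or categorical variables (features). Categorical features must be encoded numerically (for example, one-hot encoded) before training with scikit-learn.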

Output: A categorical value (class label).
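The input and output types above can be illustrated with a small sketch. The toy email-style data (`word_count`, `sender_domain`, and the `ham`/`spam` labels) is hypothetical; the sketch one-hot encodes the categorical column with `pandas.get_dummies` and shows that the model predicts class labels taken from the training targets.

```python
import pandas as pd
from sklearn.ensemble import AdaBoostClassifier

# Hypothetical toy data: one numeric feature, one categorical feature, string labels.
df = pd.DataFrame({
    "word_count": [120, 15, 300, 8, 45, 10],
    "sender_domain": ["work", "unknown", "work", "unknown", "personal", "unknown"],
    "label": ["ham", "spam", "ham", "spam", "ham", "spam"],
})

# scikit-learn estimators need numeric inputs, so one-hot encode the categorical column.
X = pd.get_dummies(df[["word_count", "sender_domain"]], columns=["sender_domain"])
y = df["label"]

model = AdaBoostClassifier(n_estimators=10, random_state=0)
model.fit(X, y)

# The output is a class label drawn from the labels seen during training.
print(model.classes_)
print(model.predict(X.head(2)))
```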

Assumptions

Assumes that each weak classifier performs at least slightly better than random guessing on the weighted training data, so that combining them iteratively, with each one correcting the mistakes of its predecessors, improves the overall classifier.
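Why the "better than random guessing" assumption matters can be seen from the classic binary AdaBoost vote weight, alpha = 0.5 * ln((1 - error) / error). The function name `classifier_weight` is hypothetical; this is a sketch of the formula, not a library call.

```python
import math


def classifier_weight(weighted_error):
    """Binary discrete AdaBoost vote weight: 0.5 * ln((1 - err) / err)."""
    return 0.5 * math.log((1 - weighted_error) / weighted_error)


for err in (0.1, 0.3, 0.45, 0.5, 0.6):
    print(f"error={err:.2f} -> alpha={classifier_weight(err):+.3f}")
```

A weak classifier with weighted error below 0.5 gets a positive vote, one at exactly 0.5 (random guessing on two classes) gets a vote of zero and contributes nothing, and one above 0.5 would get a negative vote.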

Use Case

AdaBoost Classification is effective for improving the accuracy of weak models, especially on complex datasets with non-linear relationships. For example, it can classify whether an email is spam based on features such as word frequency and sender information.

Advantages

- It can significantly improve the accuracy of weak classifiers.

- Primarily reduces bias; in practice it can also reduce variance.

- It is often relatively resistant to overfitting, especially when regularized with a smaller learning rate, a limited number of estimators, and simple weak classifiers.
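A quick way to check the accuracy claim on real data is to compare one decision stump with boosted stumps under cross-validation. This sketch uses scikit-learn's bundled breast cancer dataset as a stand-in; the choices `n_estimators=100` and `learning_rate=0.5` are illustrative assumptions, where a learning rate below 1 shrinks each stump's contribution as a form of regularization.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import AdaBoostClassifier
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)

# A single one-level tree (the weak classifier) versus an AdaBoost ensemble of them.
stump = DecisionTreeClassifier(max_depth=1, random_state=0)
boosted = AdaBoostClassifier(n_estimators=100, learning_rate=0.5, random_state=0)

stump_acc = cross_val_score(stump, X, y, cv=5).mean()
boosted_acc = cross_val_score(boosted, X, y, cv=5).mean()
print(f"single stump: {stump_acc:.3f}  boosted stumps: {boosted_acc:.3f}")
```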

Disadvantages

- It is sensitive to noisy data and outliers, because it keeps increasing the weights of points it cannot classify correctly.

- Requires careful tuning of parameters such as the number of estimators, the learning rate, and the complexity of the weak classifier.

- Gains typically diminish with each additional round of boosting.
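Both the tuning and the diminishing-returns points can be explored with scikit-learn. This sketch grid-searches `n_estimators` and `learning_rate` (the grid values are arbitrary assumptions) on the bundled breast cancer dataset, then uses `staged_score`, which yields the score after each boosting round, to inspect how much later rounds add.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import AdaBoostClassifier
from sklearn.model_selection import GridSearchCV, train_test_split

X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0
)

# n_estimators and learning_rate trade off against each other, so tune them together.
grid = GridSearchCV(
    AdaBoostClassifier(random_state=0),
    param_grid={"n_estimators": [50, 200], "learning_rate": [0.1, 0.5, 1.0]},
    cv=5,
)
grid.fit(X_train, y_train)
print("best params:", grid.best_params_)

# Test accuracy after each boosting round of the best model.
scores = list(grid.best_estimator_.staged_score(X_test, y_test))
for n in sorted({1, 10, 50, len(scores)}):
    if n <= len(scores):
        print(f"after {n:>3} rounds: accuracy {scores[n - 1]:.3f}")
```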

Steps to Implement:

- Import libraries: Import the necessary libraries, for example `numpy`, `pandas`, and `sklearn`.

- Load and preprocess data: Load the dataset, handle missing values, and prepare features and target variables.

- Split the data: Use `train_test_split` to divide the data into training and testing sets.

- Create the model: Import `AdaBoostClassifier` from `sklearn.ensemble` and create an instance of it.

- Train the model: Use the `fit` method on the training data.

- Make predictions: Use the `predict` method on the test data.

- Evaluate the model: Check model performance using evaluation metrics like accuracy, precision, recall, F1 score, or the confusion matrix.
