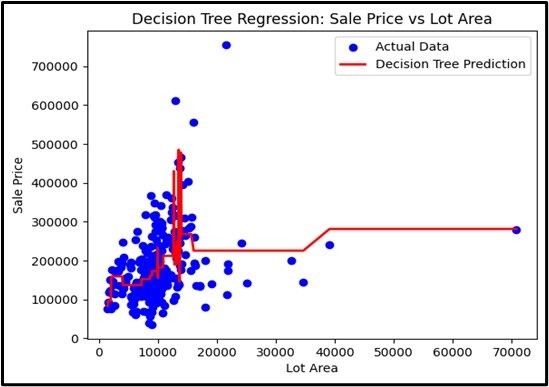

Decision Tree Regression

Decision Tree Regression is a widely used supervised learning algorithm for problems with continuous outputs. It adapts the decision tree algorithm to regression tasks, where the goal is to predict a continuous value (such as a price) rather than a discrete class label.

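- Concept: A non-linear regression model that predicts continuous outcomes by recursively splitting the data into subsets based on feature values. At each node it chooses the split that most reduces prediction error (commonly the mean squared error), which produces a tree structure.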

- Tree Structure: Consists of a root node, internal (decision) nodes, and leaf nodes; each leaf node holds a predicted value.

- Stopping Criteria: The tree stops splitting when a limit is reached, such as a maximum depth, a minimum number of samples per leaf, or a minimum reduction in error.

- Prediction: A new sample follows the path from the root to a leaf, and the prediction is the average target value of the training samples in that leaf.

- Applications: Used in real estate, finance, and healthcare for predicting continuous variables.
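
The splitting, stopping, and prediction steps above can be sketched in plain Python. This is a minimal illustration, not a production implementation. It assumes numeric features, midpoint thresholds, and the sum of squared errors as the split criterion. The names `build_tree`, `best_split`, `predict`, and `min_error_reduction` are hypothetical. In scikit-learn's `DecisionTreeRegressor`, the roughly comparable stopping controls are the `max_depth`, `min_samples_leaf`, and `min_impurity_decrease` parameters.

```python
from statistics import mean

# Hypothetical helper names; this is a teaching sketch, not a library API.

def sse(values):
    """Sum of squared errors around the mean (0 for an empty list)."""
    if not values:
        return 0.0
    m = mean(values)
    return sum((v - m) ** 2 for v in values)

def best_split(X, y, min_samples_leaf):
    """Return (feature, threshold, total_sse) of the lowest-error split, or None."""
    best = None
    for f in range(len(X[0])):
        # Candidate thresholds: midpoints between consecutive distinct values
        values = sorted(set(row[f] for row in X))
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            left = [yi for row, yi in zip(X, y) if row[f] <= t]
            right = [yi for row, yi in zip(X, y) if row[f] > t]
            # Stopping rule: skip splits that leave too few samples in a leaf
            if len(left) < min_samples_leaf or len(right) < min_samples_leaf:
                continue
            err = sse(left) + sse(right)
            if best is None or err < best[2]:
                best = (f, t, err)
    return best

def build_tree(X, y, depth=0, max_depth=3, min_samples_leaf=1, min_error_reduction=0.0):
    """Grow the tree recursively; each leaf stores the mean target value."""
    leaf = {"value": mean(y)}
    if depth >= max_depth:  # stopping rule: maximum depth
        return leaf
    split = best_split(X, y, min_samples_leaf)
    # Stopping rule: no valid split, or the error reduction is too small
    if split is None or sse(y) - split[2] <= min_error_reduction:
        return leaf
    f, t, _ = split
    left_idx = [i for i, row in enumerate(X) if row[f] <= t]
    right_idx = [i for i, row in enumerate(X) if row[f] > t]
    opts = dict(max_depth=max_depth, min_samples_leaf=min_samples_leaf,
                min_error_reduction=min_error_reduction)
    return {
        "feature": f,
        "threshold": t,
        "left": build_tree([X[i] for i in left_idx], [y[i] for i in left_idx], depth + 1, **opts),
        "right": build_tree([X[i] for i in right_idx], [y[i] for i in right_idx], depth + 1, **opts),
    }

def predict(tree, row):
    """Follow the path from the root to a leaf and return that leaf's mean."""
    while "value" not in tree:
        tree = tree["left"] if row[tree["feature"]] <= tree["threshold"] else tree["right"]
    return tree["value"]

# Made-up toy data: one feature per row
X = [[1], [2], [3], [10], [11], [12]]
y = [5.0, 6.0, 7.0, 20.0, 21.0, 22.0]
tree = build_tree(X, y, max_depth=1)
print(tree["threshold"])                        # 6.5
print(predict(tree, [4]), predict(tree, [9]))   # 6.0 21.0
```

With `max_depth=1` the tree makes a single split at 6.5. Every input on the left side is predicted as the mean of 5, 6, and 7, and every input on the right as the mean of 20, 21, and 22. Raising `min_samples_leaf` to 4 on these six points blocks every split, so the root becomes a single leaf predicting the overall mean.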

Enhancing Model

Purpose: Classify the target variable into distinct categories by splitting the data into subsets based on the values of the input features.

Input Data: Numerical or categorical variables (features).

Output: A categorical value (class label).
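
For classification, the split is chosen by scoring candidate thresholds with an impurity measure, and each leaf outputs a class label. The sketch below assumes Gini impurity (the default `criterion` of scikit-learn's `DecisionTreeClassifier`; entropy is a common alternative) and a single numeric feature. The helper names and toy data are hypothetical.

```python
from collections import Counter

def gini(labels):
    """Gini impurity: 1 - sum(p_k^2) over the class proportions p_k."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def best_threshold(x, labels):
    """Return (threshold, weighted_gini) for the best split of one numeric feature."""
    values = sorted(set(x))
    best = None
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2  # midpoint between consecutive distinct values
        left = [lab for xi, lab in zip(x, labels) if xi <= t]
        right = [lab for xi, lab in zip(x, labels) if xi > t]
        # Impurity of each child, weighted by the share of samples it receives
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if best is None or score < best[1]:
            best = (t, score)
    return best

def majority_class(labels):
    """A leaf predicts the most common class among its training samples."""
    return Counter(labels).most_common(1)[0][0]

# Made-up toy data
x = [1, 2, 3, 6, 7, 8]
labels = ["A", "A", "A", "B", "B", "A"]
t, score = best_threshold(x, labels)
print(t)                                # 4.5
print(majority_class(["B", "B", "A"]))  # B
```

The threshold 4.5 puts the three "A" samples in a pure left node. The right node still mixes two "B" samples with one "A", so as a leaf it would predict "B", the majority class.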

Assumptions

Decision trees make no specific assumptions about the data distribution, so features do not need to be normally distributed or scaled.

Use Case

Decision Tree Classification is useful for tasks where the goal is to assign instances to predefined classes, for example, predicting whether a patient has a disease based on symptoms and medical history.

Advantages

- Easy to understand and interpret.

- Handles both numerical and categorical data.

- Some implementations can handle missing values natively.

Disadvantages

- Prone to overfitting, especially with deep trees.

- Unstable: small changes in the training data can produce a very different tree.

- Can produce biased results when some classes are much more common than others (class imbalance).
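
The class-imbalance problem follows from how a leaf makes its prediction: it outputs the majority class of its training samples. The toy sketch below (pure Python, hypothetical counts and label names) shows a leaf holding 95 majority-class samples and 5 minority-class samples. It always predicts the majority class, so accuracy looks high while recall for the minority class is zero.

```python
from collections import Counter

def majority_class(labels):
    """A classification leaf predicts the most common class it saw in training."""
    return Counter(labels).most_common(1)[0][0]

def recall(y_true, y_pred, positive):
    """Share of actual `positive` samples that were predicted as `positive`."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == p == positive)
    actual = sum(1 for t in y_true if t == positive)
    return tp / actual if actual else 0.0

# Hypothetical imbalanced leaf: 95 "healthy" samples and 5 "disease" samples
y_true = ["healthy"] * 95 + ["disease"] * 5
leaf_prediction = majority_class(y_true)
y_pred = [leaf_prediction] * len(y_true)  # every sample in this leaf gets the same label

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
print(leaf_prediction)                    # healthy
print(accuracy)                           # 0.95
print(recall(y_true, y_pred, "disease"))  # 0.0
```

In scikit-learn, one common mitigation is `DecisionTreeClassifier(class_weight="balanced")`, which weights classes inversely to their frequency. Evaluating with recall or F1 score, not accuracy alone, also makes the problem visible.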

Steps to Implement:

- Import necessary libraries: Use `numpy`, `pandas`, and `sklearn`.

- Load and preprocess data: Load the dataset, handle missing values, and prepare features and target variables.

- Split the data: Use `train_test_split` to divide the data into training and testing sets.

- Import and instantiate DecisionTreeClassifier: From `sklearn.tree`, import and create an instance of `DecisionTreeClassifier`.

- Train the model: Use the `fit` method on the training data.

- Make predictions: Use the `predict` method on the test data.

- Evaluate the model: Check model performance using evaluation metrics like accuracy, precision, recall, F1 score, or the confusion matrix.
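
The steps above can be sketched end to end with scikit-learn. This is a minimal example that assumes scikit-learn is installed. It uses the built-in iris dataset as a stand-in (iris has no missing values, so the preprocessing step is skipped), and the `test_size`, `max_depth`, and `random_state` values are arbitrary choices, not recommendations.

```python
from sklearn.datasets import load_iris
from sklearn.metrics import accuracy_score, classification_report, confusion_matrix
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Load data: iris has 150 samples, 4 numeric features, and 3 classes
X, y = load_iris(return_X_y=True)

# Split the data: hold out 25% for testing; stratify keeps class proportions similar
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42, stratify=y
)

# Instantiate the classifier; max_depth limits growth to curb overfitting
model = DecisionTreeClassifier(max_depth=3, random_state=42)

# Train the model
model.fit(X_train, y_train)

# Make predictions on the held-out test set
y_pred = model.predict(X_test)

# Evaluate the model
cm = confusion_matrix(y_test, y_pred)
print("Accuracy:", accuracy_score(y_test, y_pred))
print(cm)
print(classification_report(y_test, y_pred))
```

Fixing `random_state` makes the split and the tree reproducible, and limiting `max_depth` is one way to address the overfitting noted under Disadvantages.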

Ready to Explore?

Check Out My GitHub Code