Hierarchical Clustering

Hierarchical Clustering is an unsupervised learning algorithm that groups similar objects by building a hierarchy of clusters. The algorithm organizes the data either bottom-up (agglomerative clustering), where individual data points are progressively merged into larger clusters, or top-down (divisive clustering), where the full dataset is recursively split into smaller clusters.

- Concept: Hierarchical Clustering is an unsupervised machine learning algorithm used to group similar objects into clusters by creating a hierarchy of nested clusters.

- Agglomerative Clustering: Starts with individual data points and merges them into larger clusters based on similarity.

- Divisive Clustering: Begins with the entire dataset as one large cluster and recursively splits it into smaller, more specific clusters.

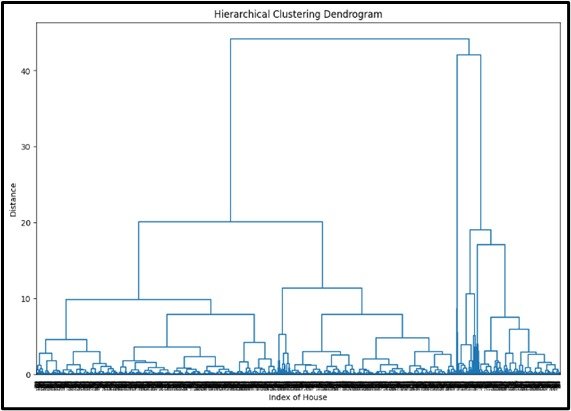

- Dendrogram: A tree-like diagram that shows the hierarchical relationships between clusters, allowing for easy visualization of how clusters are formed or divided.

- Applications: Hierarchical Clustering is often used in:

  - Genomics: Classifying genes or proteins based on similarity.

  - Market Research: Segmenting customers based on purchasing behavior or preferences.
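To make the bottom-up merging concrete, here is a minimal sketch using `scipy.cluster.hierarchy.linkage`. The five one-dimensional points and the choice of single linkage are assumptions made for illustration only. Each row of the returned matrix records one merge: the two cluster indices joined, the distance at which they were joined, and the size of the new cluster. This sketch covers only the agglomerative direction.

```python
import numpy as np
from scipy.cluster.hierarchy import linkage

# Five toy 1-D points (illustrative data, not from a real dataset).
points = np.array([[0.0], [1.0], [5.0], [6.0], [20.0]])

# Single linkage: the distance between two clusters is the distance
# between their closest members.
Z = linkage(points, method="single")

# n points always produce n - 1 merges. Indices >= n refer to clusters
# created by earlier merges.
for left, right, dist, size in Z:
    print(f"merge {int(left)} + {int(right)} at distance {dist:.1f} "
          f"-> cluster of {int(size)} points")
```

Reading the rows from top to bottom traces the dendrogram from its leaves to its root: the closest pairs merge first, and the isolated point joins last.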

Enhancing Model

Purpose: To group similar data points into clusters based on their similarity.

Input Data: Numerical or categorical variables (features), typically transformed into a distance matrix.

Output: A hierarchy or dendrogram representing the nested clusters.
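As an illustration of the distance-matrix input, the sketch below computes pairwise Euclidean distances with `scipy.spatial.distance.pdist` and expands them with `squareform`. The four sample points and the Euclidean metric are assumptions chosen for readability.

```python
import numpy as np
from scipy.spatial.distance import pdist, squareform

# Four toy observations with two numerical features each (illustrative).
X = np.array([[0.0, 0.0], [3.0, 4.0], [6.0, 8.0], [0.0, 1.0]])

# pdist returns the "condensed" form: one entry per unordered pair,
# i.e. n * (n - 1) / 2 values for n observations.
condensed = pdist(X, metric="euclidean")

# squareform expands it into the familiar symmetric n x n matrix.
square = squareform(condensed)

print(condensed.shape)  # number of unique pairs
print(square)
```

The condensed form is the one `scipy.cluster.hierarchy.linkage` accepts when it is given precomputed distances; the square form is mainly useful for inspection.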

Assumptions

No specific assumptions about the data distribution. The resulting hierarchy does, however, depend on the chosen distance metric and linkage method.

Use Case

Hierarchical Clustering is suitable for exploratory data analysis to discover the underlying structure of the data. For example, grouping customers based on purchasing behavior for market segmentation.

Advantages

- It does not require specifying the number of clusters in advance.

- Produces a dendrogram, which is a visual representation of the cluster hierarchy.

- Suitable for small to medium-sized datasets.

Disadvantages

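- Computationally intensive for large datasets: standard agglomerative algorithms need O(n²) memory for the pairwise distance matrix and between O(n²) and O(n³) time, depending on the linkage method.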

- Sensitive to noise and outliers.

- It is difficult to identify the optimal number of clusters from the dendrogram.
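One common heuristic for the last point, not taken from this article, is to look for the largest jump between consecutive merge distances and cut the tree there. The sketch below assumes two synthetic, well-separated blobs and Ward linkage; on real data the largest gap is often far less clear-cut, so treat the result as a suggestion, not an answer.

```python
import numpy as np
from scipy.cluster.hierarchy import fcluster, linkage

# Two synthetic, well-separated blobs (an assumption for illustration).
rng = np.random.default_rng(42)
blob_a = rng.normal(loc=0.0, scale=0.5, size=(20, 2))
blob_b = rng.normal(loc=10.0, scale=0.5, size=(20, 2))
X = np.vstack([blob_a, blob_b])

# Ward linkage operates on raw observations with Euclidean distance.
Z = linkage(X, method="ward")

# Column 2 of Z holds the merge heights, in non-decreasing order for Ward.
heights = Z[:, 2]
gaps = np.diff(heights)

# If the largest gap follows merge i, stopping there leaves
# n - (i + 1) clusters.
i = int(np.argmax(gaps))
n_clusters = len(X) - (i + 1)

labels = fcluster(Z, t=n_clusters, criterion="maxclust")
print("suggested number of clusters:", n_clusters)
```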

Steps to Implement:

- Import the necessary libraries: `numpy`, `pandas`, `scipy`, and `sklearn`.

- Load and preprocess data: Load the dataset, handle missing values, and prepare features for clustering.

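- Compute distance matrix: Use `scipy.spatial.distance` or `sklearn.metrics.pairwise` to compute the distance matrix. Note that `linkage` expects precomputed distances in the condensed form returned by `pdist`, not as a square matrix.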

- Import and perform hierarchical clustering: From `scipy.cluster.hierarchy`, use methods like `linkage` to perform hierarchical clustering and `dendrogram` to visualize the results.

- Choose the number of clusters: Decide on the number of clusters based on the dendrogram or other methods like the silhouette score.

- Cut the dendrogram: Use `fcluster` to cut the dendrogram and assign cluster labels.

- Evaluate the clustering: Assess clustering quality using metrics like the silhouette score or visual inspection of cluster assignments.
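The steps above can be sketched end to end as follows. This is a minimal illustration under stated assumptions, not the code from the linked repository: the data is synthetic (three blobs with one artificially missing value), the linkage method is average linkage, and the number of clusters is fixed at 3 because the synthetic data was generated that way.

```python
import numpy as np
import pandas as pd
from scipy.cluster.hierarchy import dendrogram, fcluster, linkage
from scipy.spatial.distance import pdist
from sklearn.metrics import silhouette_score

# Load data: a synthetic stand-in for a real dataset (assumption).
rng = np.random.default_rng(0)
centers = np.array([[0.0, 0.0], [8.0, 8.0], [0.0, 8.0]])
points = np.vstack([c + rng.normal(scale=0.5, size=(30, 2)) for c in centers])
df = pd.DataFrame(points, columns=["x", "y"])
df.loc[0, "x"] = np.nan  # simulate one missing value

# Preprocess: drop rows with missing values and extract the features.
features = df.dropna().to_numpy()

# Compute the condensed pairwise distance matrix.
distances = pdist(features, metric="euclidean")

# Perform agglomerative clustering on the precomputed distances.
Z = linkage(distances, method="average")

# Build the dendrogram structure. no_plot=True skips drawing, so
# matplotlib is not required; drop it to render the tree.
tree = dendrogram(Z, no_plot=True)

# Cut the tree into at most k flat clusters.
k = 3
labels = fcluster(Z, t=k, criterion="maxclust")

# Evaluate: silhouette scores range from -1 to 1, higher is better.
score = silhouette_score(features, labels)
print(f"clusters found: {len(set(labels))}, silhouette: {score:.2f}")
```

On real data, the silhouette score can be recomputed for several values of `k` to compare candidate cuts before settling on one.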

Ready to Explore?

Check Out My GitHub Code