K-Means Clustering

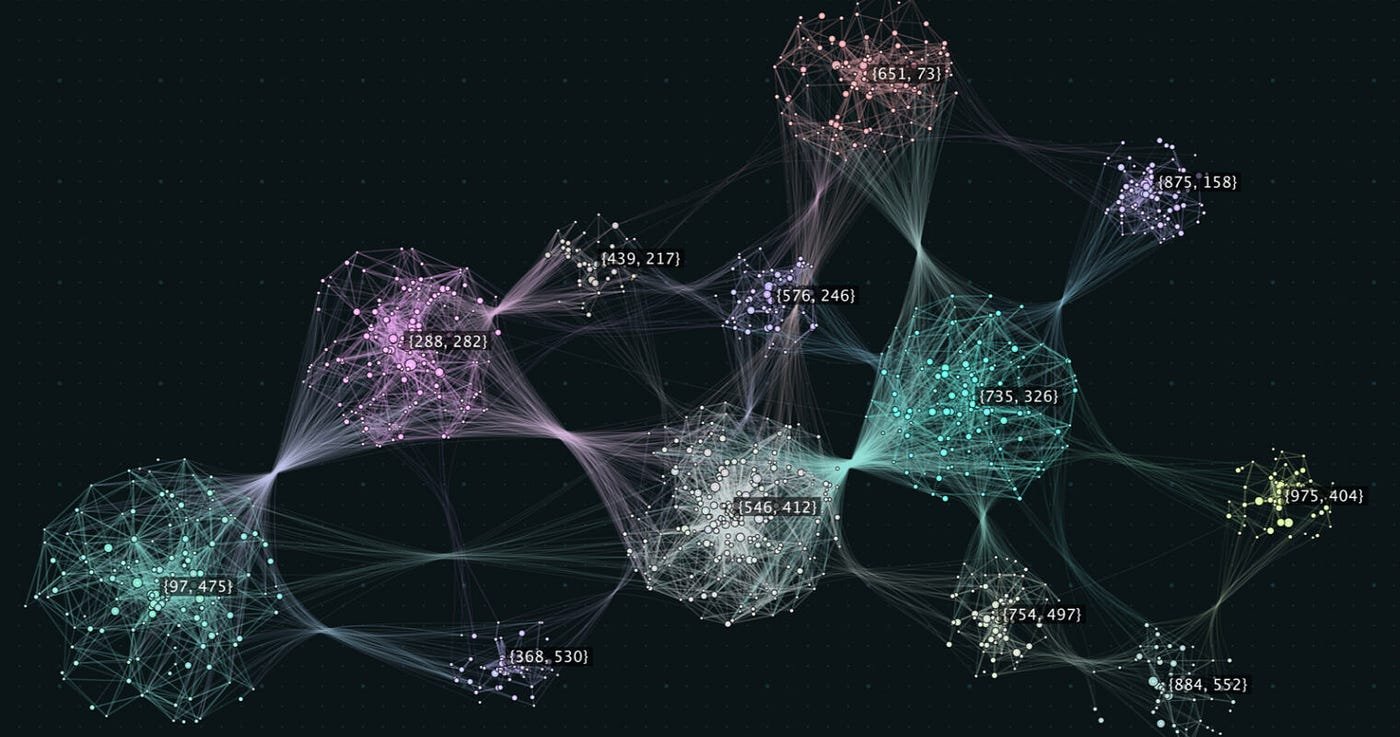

K-Means Clustering is an unsupervised machine learning algorithm that partitions data into K clusters based on feature similarity. It assigns each data point to the nearest cluster center (centroid), moves each centroid to the mean of the points assigned to it, and repeats these two steps until the assignments stop changing. The result minimizes the within-cluster sum of squared distances (inertia) only locally, so different starting centroids can lead to different final clusters. K-Means is widely used for tasks like data segmentation, pattern recognition, and image compression.
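The assignment and update steps described above can be sketched from scratch in NumPy. This is a minimal version of the standard Lloyd's algorithm, assuming Euclidean distance and random initialization from the data points; the helper names `assign_clusters` and `kmeans`, their parameters, and the two-blob test data are illustrative, not part of any library.

```python
import numpy as np


def assign_clusters(X, centroids):
    """Return the index of the nearest centroid (squared Euclidean distance) for each row of X."""
    dists = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return dists.argmin(axis=1)


def kmeans(X, k, n_iter=100, seed=0):
    """Minimal Lloyd's algorithm (hypothetical helper, for illustration only)."""
    rng = np.random.default_rng(seed)
    # Initialize the centroids with k distinct data points chosen at random.
    centroids = X[rng.choice(len(X), size=k, replace=False)].astype(float)
    for _ in range(n_iter):
        # Assignment step: each point joins its nearest centroid.
        labels = assign_clusters(X, centroids)
        # Update step: each centroid moves to the mean of its points
        # (an empty cluster keeps its previous centroid).
        new_centroids = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        # Converged: the centroids stopped moving.
        if np.allclose(new_centroids, centroids):
            break
        centroids = new_centroids
    # Final assignment so labels match the returned centroids.
    labels = assign_clusters(X, centroids)
    # Inertia: the within-cluster sum of squared distances that K-Means reduces.
    inertia = float(((X - centroids[labels]) ** 2).sum())
    return labels, centroids, inertia


# Usage on two well-separated synthetic blobs of 50 points each.
rng = np.random.default_rng(42)
X = np.vstack([rng.normal(0.0, 0.5, size=(50, 2)),
               rng.normal(5.0, 0.5, size=(50, 2))])
labels, centroids, inertia = kmeans(X, k=2)
print(np.round(centroids, 2), round(inertia, 2))
```

In practice a library implementation such as scikit-learn's `KMeans` adds smarter seeding and multiple restarts on top of this basic loop.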

- Concept: K-Means Clustering is an unsupervised machine learning algorithm used to group data into K clusters based on the similarity of their features.

- Cluster Centroids: The algorithm identifies K centroids, each representing the center of a cluster, and assigns data points to the nearest centroid.

- Iterative Refinement: K-Means repeatedly moves each centroid to the mean of its assigned points and reassigns data points, reducing the total squared distance between points and their centroids until the assignments stop changing.

- Applications: K-Means Clustering is commonly used in:

  - Customer Segmentation: Grouping customers based on purchasing behavior.

  - Image Segmentation: Dividing an image into regions based on color or intensity.

  - Document Clustering: Organizing a collection of documents into topics based on content similarity.
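For the image-segmentation use case, a common approach is to treat each pixel's color as a point and cluster the colors. The sketch below assumes scikit-learn and uses a small synthetic RGB array in place of a real image; the three base colors, the noise level, and the 40x40 size are arbitrary choices for illustration.

```python
import numpy as np
from sklearn.cluster import KMeans

# Hypothetical stand-in for an image: 40x40 RGB pixels drawn around three base colors.
rng = np.random.default_rng(0)
base_colors = np.array([[200, 30, 30], [30, 200, 30], [30, 30, 200]], dtype=float)
choice = rng.integers(0, 3, size=(40, 40))
image = np.clip(base_colors[choice] + rng.normal(0, 10, size=(40, 40, 3)), 0, 255)

# Treat every pixel as a 3-D point (R, G, B) and cluster the colors.
pixels = image.reshape(-1, 3)
km = KMeans(n_clusters=3, n_init=10, random_state=0).fit(pixels)

# Segment map: one cluster label per pixel, reshaped back to the image grid.
segments = km.labels_.reshape(image.shape[:2])
# Quantized image: each pixel replaced by its cluster's centroid color.
quantized = km.cluster_centers_[km.labels_].reshape(image.shape)
print(np.round(km.cluster_centers_))
```

The same idea is what makes K-Means useful for image compression: the quantized image needs only K colors plus one small label per pixel.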

Enhancing Model

Purpose: This ML algorithm groups similar data points together.

Input Data: Numerical variables.

Output: Cluster labels.
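To make the input and output concrete: the model takes a numeric feature matrix and returns one integer label per row. A minimal scikit-learn sketch with six made-up 2-D points:

```python
import numpy as np
from sklearn.cluster import KMeans

# Input: a numeric feature matrix, one row per sample (toy values, for illustration only).
X = np.array([[1.0, 1.0], [1.2, 0.8], [0.9, 1.1],
              [8.0, 8.0], [8.2, 7.9], [7.8, 8.1]])

# Output: one integer cluster label per row.
labels = KMeans(n_clusters=2, n_init=10, random_state=0).fit_predict(X)
print(labels)
```

The label values themselves are arbitrary identifiers; only which points share a label is meaningful.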

Assumptions

Assumes clusters are roughly spherical and of similar size, because each point is simply assigned to its nearest centroid by Euclidean distance.
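The effect of this assumption can be checked on synthetic data. This scikit-learn sketch compares K-Means on two round blobs with K-Means on two interleaving half-moons (`make_moons`), scoring each against the known grouping with the adjusted Rand index; the sample sizes, noise level, and blob centers are illustrative assumptions.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs, make_moons
from sklearn.metrics import adjusted_rand_score

# Two round, equally sized blobs: matches the K-Means assumption.
X_blobs, y_blobs = make_blobs(n_samples=400, centers=[[0, 0], [6, 6]],
                              cluster_std=1.0, random_state=0)
# Two interleaving half-moons: two clear groups, but neither is spherical.
X_moons, y_moons = make_moons(n_samples=400, noise=0.05, random_state=0)

ari = {}
for name, X, y in [("blobs", X_blobs, y_blobs), ("moons", X_moons, y_moons)]:
    y_pred = KMeans(n_clusters=2, n_init=10, random_state=0).fit_predict(X)
    # Adjusted Rand index: 1.0 = perfect match with the true groups, ~0 = chance level.
    ari[name] = adjusted_rand_score(y, y_pred)
    print(f"{name}: ARI = {ari[name]:.2f}")
```

On the blobs, K-Means should recover the groups almost exactly; on the moons, it typically cuts across each crescent with a straight boundary, so the score drops well below 1.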

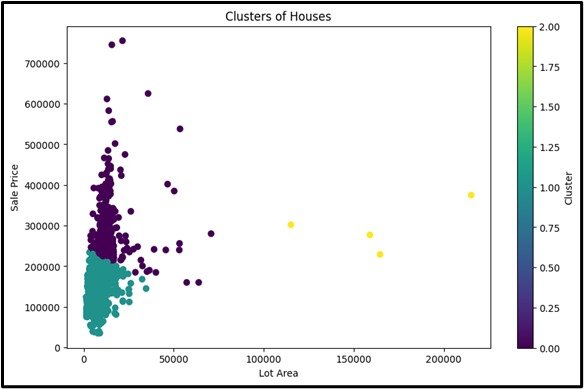

Use Case

K-means clustering can be useful when you need to identify natural groupings in your data. For example, segmenting customers into different groups based on their annual income and spending score.
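A sketch of the customer-segmentation example, assuming scikit-learn and pandas. The data here is synthetic (five made-up customer groups), not a real dataset, and the column names `annual_income_k` and `spending_score` are hypothetical.

```python
import numpy as np
import pandas as pd
from sklearn.cluster import KMeans
from sklearn.preprocessing import StandardScaler

# Hypothetical customer data (synthetic, not a real dataset):
# annual income in thousands and a spending score on a 1-100 scale.
rng = np.random.default_rng(0)
centers = [(30, 20), (30, 80), (60, 50), (90, 20), (90, 80)]
rows = [rng.normal(loc=c, scale=(5, 6), size=(40, 2)) for c in centers]
customers = pd.DataFrame(np.vstack(rows), columns=["annual_income_k", "spending_score"])

# Standardize both features so neither dominates the Euclidean distance.
X = StandardScaler().fit_transform(customers)
customers["segment"] = KMeans(n_clusters=5, n_init=10, random_state=0).fit_predict(X)

# Average profile of each segment, useful for naming them (e.g. "high income, low spend").
print(customers.groupby("segment").mean().round(1))
```

Standardizing is a precaution: K-Means uses Euclidean distance, so a feature measured on a larger scale would otherwise dominate the grouping.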

Advantages

- Works well with spherical clusters and low-dimensional data.

- Scales well with large datasets.

- Provides a clear partition of data into clusters.

Disadvantages

- Assumes clusters are roughly spherical and similarly sized, so it struggles with elongated, irregular, or unevenly sized clusters.

- Sensitive to the initial choice of centroids: different starting points can converge to different local solutions.

- Requires the number of clusters (k) to be specified in advance.
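The sensitivity to initialization can be observed by running K-Means several times with purely random starting centroids and a single run each, then comparing the final inertia. The sketch assumes scikit-learn; the blob dataset and the choice of 8 clusters are arbitrary. k-means++ seeding combined with several restarts (`n_init`), keeping the lowest-inertia run, is a common mitigation.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs

X, _ = make_blobs(n_samples=1000, centers=8, cluster_std=1.5, random_state=1)

# One run per seed with purely random initial centroids: the final inertia
# (within-cluster sum of squares) can differ between seeds.
single_runs = [
    KMeans(n_clusters=8, init="random", n_init=1, random_state=seed).fit(X).inertia_
    for seed in range(10)
]
print("random init, single run:", np.round(single_runs, 1))

# Mitigation: k-means++ seeding plus 10 restarts, keeping the lowest-inertia run.
best = KMeans(n_clusters=8, init="k-means++", n_init=10, random_state=0).fit(X)
print("k-means++, best of 10:", round(best.inertia_, 1))
```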

Steps to Implement:

- Import necessary libraries: use `numpy`, `pandas`, and `sklearn`.

- Load and preprocess data: Load the dataset, handle missing values, and prepare features for clustering (for example, scale them so that no single feature dominates the distance calculation).

- Choose the number of clusters (k): Decide on the number of clusters based on domain knowledge or methods like the Elbow Method.

- Import and instantiate KMeans: From `sklearn.cluster`, import and create an instance of `KMeans` with the chosen number of clusters.

- Fit the model: Use the `fit` method on the data.

- Predict clusters: Use the `predict` method to assign cluster labels to data points.

- Evaluate the clustering: Assess the clustering quality using metrics like inertia, silhouette score, or visual inspection of cluster assignments.
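The steps above can be sketched end to end with scikit-learn. This is one possible pipeline under stated assumptions: a synthetic dataset from `make_blobs` stands in for real data, missing values are simulated and filled with the column mean, and k is picked by the highest silhouette score over k = 2 to 8 (the printed inertia values can be plotted for the Elbow Method instead).

```python
import numpy as np
import pandas as pd
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.impute import SimpleImputer
from sklearn.metrics import silhouette_score
from sklearn.preprocessing import StandardScaler

# Load data: synthetic stand-in for a real dataset, with some values knocked out.
X_raw, _ = make_blobs(n_samples=500, centers=4, n_features=3, random_state=42)
df = pd.DataFrame(X_raw, columns=["f1", "f2", "f3"])
df.iloc[::50, 0] = np.nan  # simulate missing values in the first column

# Preprocess: fill missing values with the column mean, then standardize.
X = SimpleImputer(strategy="mean").fit_transform(df)
X = StandardScaler().fit_transform(X)

# Choose k: record inertia (for an elbow plot) and silhouette score per candidate.
results = {}
for k in range(2, 9):
    km = KMeans(n_clusters=k, n_init=10, random_state=0).fit(X)
    results[k] = (km.inertia_, silhouette_score(X, km.labels_))
for k, (inertia, sil) in results.items():
    print(f"k={k}: inertia={inertia:.1f}, silhouette={sil:.3f}")
best_k = max(results, key=lambda k: results[k][1])

# Fit the final model and predict cluster labels.
model = KMeans(n_clusters=best_k, n_init=10, random_state=0).fit(X)
labels = model.predict(X)

# Evaluate the chosen clustering.
print("chosen k:", best_k)
print("inertia:", round(model.inertia_, 1))
print("silhouette:", round(silhouette_score(X, labels), 3))
```

Choosing k by silhouette score is only one option; domain knowledge or a visual elbow in the inertia curve may point to a different value.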
