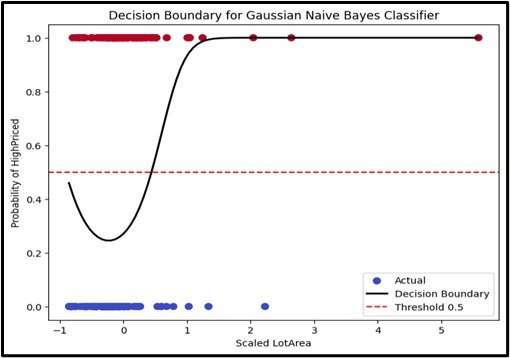

Naive Bayes Classification

Naive Bayes Classification is a probabilistic method used for classification tasks. It applies Bayes’ theorem, assuming that features are independent of each other given the class label. Despite its simplicity, this method often performs well on complex classification problems by efficiently estimating the probability of a data point belonging to a particular class.
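
In symbols, the idea above can be written as follows. This is the standard textbook form, not something specific to this article; the notation is an assumption, with $y$ for the class label and $x_1, \dots, x_n$ for the features.

$$
P(y \mid x_1, \dots, x_n) = \frac{P(y)\, P(x_1, \dots, x_n \mid y)}{P(x_1, \dots, x_n)}
$$

Under the independence assumption the joint likelihood factorizes, and the denominator is the same for every class, so the predicted class is:

$$
\hat{y} = \arg\max_{y} \; P(y) \prod_{i=1}^{n} P(x_i \mid y)
$$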

- Concept: Naive Bayes Classification is a probabilistic algorithm that classifies data by applying Bayes’ theorem. It assumes that the features are independent of each other within each class.

- Probabilistic Approach: Uses Bayes’ theorem to calculate the probability of each class given the features.

- Independence Assumption: Assumes that features are independent of each other given the class, which simplifies the model and the computation.

- Applications: Naive Bayes Classification is commonly used in:

  - Text Classification: Spam filtering, sentiment analysis.

  - Medical Diagnosis: Predicting diseases based on symptoms.

Enhancing Model

Purpose: Classify the target variable into distinct categories using probabilities estimated from the training data.

Input Data: Numerical or categorical variables (features).

Output: A categorical value (class label).

Assumptions

Assumes each feature follows a specific distribution within each class, typically Gaussian (continuous values), Multinomial (counts), or Bernoulli (binary values).
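
To make the choice of distribution concrete, here is a minimal sketch that fits one scikit-learn variant per feature type. The synthetic data, the random seed, and the variable names are assumptions made purely for illustration; they do not come from any real dataset.

```python
import numpy as np
from sklearn.naive_bayes import BernoulliNB, GaussianNB, MultinomialNB

rng = np.random.default_rng(0)
y = np.array([0] * 50 + [1] * 50)  # two balanced classes (illustrative)

# Continuous features: class 1 is shifted by +3 on both features.
X_cont = rng.normal(loc=0.0, scale=1.0, size=(100, 2)) + 3.0 * y[:, None]

# Count features: each class has a different expected count per feature.
lam = np.where(y[:, None] == 1, [5.0, 1.0], [1.0, 5.0])
X_counts = rng.poisson(lam=lam)

# Binary features: whether each count exceeds 2.
X_binary = (X_counts > 2).astype(int)

candidates = {
    "GaussianNB": (GaussianNB(), X_cont),          # continuous values
    "MultinomialNB": (MultinomialNB(), X_counts),  # non-negative counts
    "BernoulliNB": (BernoulliNB(), X_binary),      # 0/1 indicators
}

scores = {}
for name, (model, X) in candidates.items():
    model.fit(X, y)
    scores[name] = model.score(X, y)  # accuracy on the training data
    print(f"{name}: training accuracy = {scores[name]:.2f}")
```

The scores here are measured on the training data only, so they show that each variant matches its feature type rather than how well it would generalize.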

Use Case

Naive Bayes Classification can be useful for large datasets and real-time predictions. It works well for text classification tasks such as spam detection, sentiment analysis, and document categorization.
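
As a rough illustration of the spam-detection use case, the sketch below trains `MultinomialNB` on word counts. The six training messages and their labels are invented for this example, and a real filter would need far more data.

```python
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import make_pipeline

# Hypothetical toy corpus, made up for illustration only.
train_texts = [
    "win a free prize now",
    "free money claim your prize",
    "limited offer win cash",
    "meeting at noon tomorrow",
    "please review the attached report",
    "lunch with the team today",
]
train_labels = ["spam", "spam", "spam", "ham", "ham", "ham"]

# CountVectorizer turns each message into word counts;
# MultinomialNB models those counts per class.
spam_filter = make_pipeline(CountVectorizer(), MultinomialNB())
spam_filter.fit(train_texts, train_labels)

new_messages = ["claim your free cash prize", "team meeting report tomorrow"]
predictions = spam_filter.predict(new_messages)
for message, label in zip(new_messages, predictions):
    print(f"{label}: {message}")
```

Wrapping the vectorizer and the classifier in one pipeline ensures new messages are encoded with the same vocabulary that was learned during training.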

Advantages

- This is efficient in terms of both time and space.

- It works well with high-dimensional data.

- Performs well even with small amounts of training data.

Disadvantages

- Assumes independence between features.

- Can struggle with numerical features, since `GaussianNB` assumes each one is normally distributed within a class.

- Its predicted probabilities can be hard to interpret, because the independence assumption tends to push them toward 0 or 1.

Steps to Implement:

- Import libraries: Import the necessary libraries, such as `numpy`, `pandas`, and `sklearn`.

- Load and preprocess data: Load the dataset, handle missing values, and prepare features and target variables.

- Split the data: Use `train_test_split` to divide the data into training and testing sets.

- Create the model: From `sklearn.naive_bayes`, import and create an instance of `GaussianNB`, `MultinomialNB`, or `BernoulliNB`, depending on the data.

- Train the model: Use the `fit` method on the training data.

- Make predictions: Use the `predict` method on the test data.

- Evaluate the model: Check model performance using evaluation metrics like accuracy, precision, recall, F1 score, or the confusion matrix.
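
The steps above can be sketched end to end as follows. This is a minimal example under stated assumptions, not the code from the linked repository: it uses scikit-learn's built-in Iris dataset as a stand-in, picks `GaussianNB` because the features are continuous, and the split ratio and random seed are arbitrary choices.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.metrics import accuracy_score, classification_report, confusion_matrix
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB

# Load the data (Iris is a stand-in; substitute your own features and target).
X, y = load_iris(return_X_y=True)

# Preprocess: Iris has no missing values, but real data should be checked.
if np.isnan(X).any():
    raise ValueError("Handle missing values before fitting the model.")

# Split into training and testing sets, keeping class proportions equal.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42, stratify=y
)

# Create and train the model.
model = GaussianNB()
model.fit(X_train, y_train)

# Make predictions on the held-out test data.
y_pred = model.predict(X_test)

# Evaluate with accuracy, per-class precision/recall/F1, and a confusion matrix.
accuracy = accuracy_score(y_test, y_pred)
cm = confusion_matrix(y_test, y_pred)
print(f"Accuracy: {accuracy:.3f}")
print(classification_report(y_test, y_pred))
print(cm)
```

For count or binary features, swapping `GaussianNB` for `MultinomialNB` or `BernoulliNB` leaves the rest of the workflow unchanged.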

Ready to Explore?

Check Out My GitHub Code