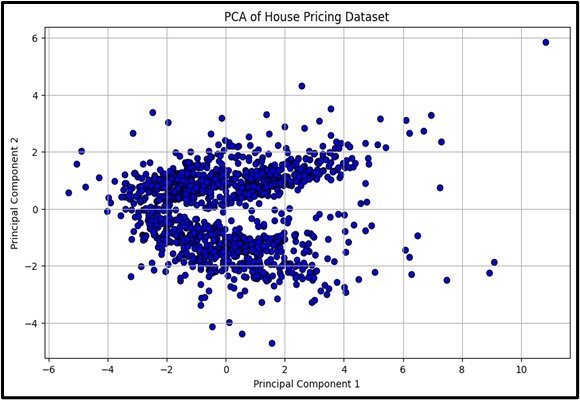

Principal Component Analysis

Principal Component Analysis (PCA) is an unsupervised machine learning technique used to reduce the dimensionality of data. It transforms high-dimensional data into a lower-dimensional form by identifying a set of orthogonal directions (principal components) that capture the most variance in the data and projecting the data onto them. This reduction simplifies the dataset while preserving as much of its structure as possible.
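As a rough sketch of the math behind this, assume the data matrix $X$ (with $n$ samples as rows and $d$ features as columns) has already been centered. The symbols $X$, $C$, $v_i$, $\lambda_i$, $W_k$ and $Z$ are notation introduced here for illustration only.

$$
C = \frac{1}{n-1} X^{\top} X, \qquad C\,v_i = \lambda_i v_i, \qquad \lambda_1 \ge \lambda_2 \ge \dots \ge \lambda_d \ge 0
$$

$$
v_1 = \arg\max_{\lVert w \rVert = 1} w^{\top} C\, w, \qquad Z = X W_k, \quad W_k = [\,v_1 \;\; v_2 \;\; \cdots \;\; v_k\,]
$$

$$
\text{explained variance ratio of component } i = \frac{\lambda_i}{\sum_{j=1}^{d} \lambda_j}
$$

Here $C$ is the sample covariance matrix, its eigenvectors $v_i$ are the principal components, and keeping the $k$ eigenvectors with the largest eigenvalues gives the reduced representation $Z$.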

- Concept: PCA re-expresses the data along new orthogonal axes (principal components), each a linear combination of the original features, ordered by the amount of variance they capture.

- Dimensionality Reduction: PCA reduces the number of features in the dataset by projecting data onto a smaller set of orthogonal axes (principal components) that capture the most variance.

- Variance Preservation: The principal components are chosen to maximize the variance in the data, ensuring that the most significant features are retained.

- Applications: PCA is widely used in:

- Data Preprocessing: Reducing dimensionality before applying machine learning algorithms.

- Visualization: Simplifying data for visual exploration in lower dimensions.

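- Enhancing Model Performance: Reducing the number of features can improve model performance and reduce overfitting.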

Purpose: Reduce the number of features while preserving as much variance as possible.

Input Data: Numerical variables.

Output: Reduced set of principal components.

Assumptions

PCA assumes linear relationships between variables and a sufficiently large sample size.

Use Case

Use PCA when you need to reduce dimensionality, either to improve model performance or to visualize the data. For example, it can reduce the number of features in the handwritten digits dataset while retaining most of the variance, for visualization or further analysis.
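The handwritten digits use case could look roughly like the sketch below. It assumes scikit-learn's bundled `load_digits` dataset is the one meant here, and the 95% variance threshold and the 2-component projection are illustrative choices, not values from the original text.

```python
from sklearn.datasets import load_digits
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

# Assumption: scikit-learn's bundled 8x8 digits dataset (1797 samples, 64 pixel features).
X = load_digits().data

# Standardize so every pixel contributes on the same scale.
X_scaled = StandardScaler().fit_transform(X)

# Keep as many components as needed to retain 95% of the variance
# (the threshold is an illustrative choice). A fractional n_components
# is supported by the "full" SVD solver.
pca_95 = PCA(n_components=0.95, svd_solver="full")
X_reduced = pca_95.fit_transform(X_scaled)

# For visualization, project onto the first two components only.
pca_2d = PCA(n_components=2)
X_2d = pca_2d.fit_transform(X_scaled)

print("original shape:", X.shape)
print("reduced shape: ", X_reduced.shape)
print("variance kept: ", pca_95.explained_variance_ratio_.sum())
print("2-D shape:     ", X_2d.shape)
```

The two columns of `X_2d` can be passed to any scatter-plot function, while `X_reduced` is the version that would typically be fed to a downstream model.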

Advantages

- Reduces the number of features.

- Can improve model performance.

- Can reduce overfitting.

Disadvantages

- Can make the data harder to interpret, because each component is a combination of the original features.

- Assumes linear relationships in the data.

- May not handle non-linear relationships well.
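To illustrate the non-linearity limitation, here is a minimal sketch on a synthetic dataset of two concentric circles. The dataset (`make_circles`) and its parameters are assumptions chosen for illustration; they do not come from the original text.

```python
from sklearn.datasets import make_circles
from sklearn.decomposition import PCA

# Hypothetical non-linear dataset: y == 0 is the outer circle, y == 1 the inner one.
X, y = make_circles(n_samples=400, factor=0.3, noise=0.05, random_state=0)

# With two components PCA only rotates the data. For circular data no
# direction dominates, so the variance is split roughly evenly.
ratios = PCA(n_components=2).fit(X).explained_variance_ratio_
print("explained variance ratios:", ratios)

# Projecting onto one component collapses both circles onto the same line,
# so the inner-circle points fall inside the range of the outer-circle points.
X_1d = PCA(n_components=1).fit_transform(X).ravel()
inner, outer = X_1d[y == 1], X_1d[y == 0]
print("inner range:", inner.min(), inner.max())
print("outer range:", outer.min(), outer.max())
```

The structure that separates the two groups here is the distance from the center, which is not a linear function of the features, so a linear projection cannot expose it.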

Steps to Implement:

- Import necessary libraries: Use `numpy`, `pandas`, and `sklearn`.

- Load and preprocess data: Load the dataset, handle missing values, and prepare features for PCA.

- Standardize the data: Use `StandardScaler` from `sklearn.preprocessing` to standardize the features, as PCA is sensitive to the scale of the data.

- Import and instantiate PCA: From `sklearn.decomposition`, import `PCA` and create an instance, either specifying the number of principal components with `n_components` or leaving it unset to keep all components.

- Fit the PCA model: Use the `fit` method on the standardized data to compute the principal components.

- Transform the data: Use the `transform` method to project the data onto the principal components, reducing its dimensionality (`fit_transform` performs the fit and transform steps in one call).

- Analyze the explained variance: Examine the `explained_variance_ratio_` attribute to understand how much variance is captured by each principal component.
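The steps above can be sketched end to end as follows. This is a minimal illustration, not a definitive implementation: the toy DataFrame, its column names (`feature_a`, `feature_b`, `feature_c`), the mean imputation, and the choice of two components are all assumptions made for the example.

```python
# Step 1: import libraries.
import numpy as np
import pandas as pd
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

# Step 2: load and preprocess data.
# Hypothetical toy data standing in for a real dataset: two correlated
# features on different scales plus one independent feature.
rng = np.random.default_rng(0)
n = 200
base = rng.normal(size=n)
df = pd.DataFrame({
    "feature_a": 170 + 10 * base + rng.normal(scale=2, size=n),
    "feature_b": 70 + 8 * base + rng.normal(scale=3, size=n),
    "feature_c": rng.normal(50_000, 10_000, size=n),
})
df.loc[[3, 50], "feature_b"] = np.nan   # simulate missing values
df = df.fillna(df.mean())               # assumption: simple mean imputation

# Step 3: standardize, since PCA is sensitive to feature scale.
X_scaled = StandardScaler().fit_transform(df)

# Step 4: instantiate PCA (two components is an illustrative choice).
pca = PCA(n_components=2)

# Step 5: fit the model to compute the principal components.
pca.fit(X_scaled)

# Step 6: project the data onto the components.
X_pca = pca.transform(X_scaled)

# Step 7: inspect how much variance each component captures.
print("reduced shape:", X_pca.shape)
print("explained variance ratio:", pca.explained_variance_ratio_)
print("total variance kept:", pca.explained_variance_ratio_.sum())
```

If the total variance kept is too low for the task at hand, increasing `n_components` and re-checking `explained_variance_ratio_` is the usual next step.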
