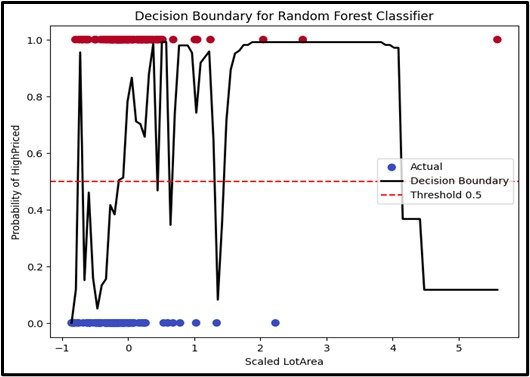

Random Forest Classification

Random Forest Classification is an ensemble learning method that combines multiple decision trees to classify data points. Each tree in the forest is trained on a random subset of the data and features, and the final classification is determined by aggregating the predictions of all the trees. This approach improves accuracy and robustness by reducing overfitting and increasing the model’s generalization ability.
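To make the idea concrete, here is a minimal sketch that builds a small "forest" by hand: each tree is fit on a bootstrap sample of the rows and considers a random subset of features at each split, and the final label is a majority vote. The synthetic dataset, the number of trees, and all variable names are assumptions chosen for illustration only.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic data, used only for illustration (an assumption, not from the article).
X, y = make_classification(n_samples=500, n_features=10, n_informative=5, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=0)

rng = np.random.default_rng(0)
n_trees = 25
trees = []
for _ in range(n_trees):
    # Random subset of the data: a bootstrap sample (rows drawn with replacement).
    idx = rng.integers(0, len(X_train), size=len(X_train))
    # Random subset of the features: max_features="sqrt" limits the features tried per split.
    tree = DecisionTreeClassifier(max_features="sqrt", random_state=int(rng.integers(1_000_000)))
    tree.fit(X_train[idx], y_train[idx])
    trees.append(tree)

# Aggregate the predictions: every tree votes and the most common class wins.
votes = np.stack([t.predict(X_test) for t in trees]).astype(int)  # shape (n_trees, n_test)
majority = np.array([np.bincount(sample_votes).argmax() for sample_votes in votes.T])
accuracy = (majority == y_test).mean()
print(f"Majority-vote accuracy: {accuracy:.3f}")
```

In practice you would use `RandomForestClassifier` instead of a loop like this. Note that scikit-learn's implementation averages the trees' predicted class probabilities rather than counting hard votes, so its results can differ slightly from this sketch.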

- Concept: Random Forest Classification is an ensemble learning technique that constructs multiple decision trees to classify data points. Each tree is trained on a random subset of the data and features.

- Ensemble of Trees: Combines predictions from multiple decision trees to make a final classification.

- Random Sampling: Each tree is built using a different subset of the data and features, which helps to create diverse trees and improve model performance.

- Applications: Random Forest Classification is used in various fields, including:

  - Finance: Fraud detection, credit scoring.

  - Healthcare: Disease diagnosis, patient risk assessment.

Enhancing Model

Purpose: Improve classification accuracy and reduce overfitting compared with a single decision tree.

Input Data: Numerical and categorical variables.

Output: Class label.

Assumptions

No specific assumptions about the data distribution.

Use Case

Use Random Forest for classification when you need a model that is both robust and accurate. For example, classifying the quality of wine based on features like acidity, alcohol content, and sugar levels.

Advantages

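- Handles both numerical and categorical data well (in scikit-learn, categorical features must first be encoded as numbers).

- Reduces overfitting compared with a single decision tree.

- Is robust to outliers.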
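As a small illustration of mixing numerical and categorical inputs, the sketch below one-hot encodes a categorical column before passing it to the forest, since scikit-learn's `RandomForestClassifier` expects numeric input. The tiny DataFrame, its column names (`alcohol`, `acidity`, `color`, `quality`), and its values are made up for this example.

```python
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.ensemble import RandomForestClassifier
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder

# Tiny made-up dataset (hypothetical column names and values).
df = pd.DataFrame({
    "alcohol": [9.4, 12.8, 10.1, 13.2, 9.8, 12.5],
    "acidity": [0.70, 0.28, 0.66, 0.31, 0.58, 0.36],
    "color": ["red", "white", "red", "white", "red", "white"],
    "quality": ["low", "high", "low", "high", "low", "high"],
})
X = df.drop(columns="quality")
y = df["quality"]

# One-hot encode the categorical column; numeric columns pass through unchanged.
preprocess = ColumnTransformer(
    transformers=[("cat", OneHotEncoder(handle_unknown="ignore"), ["color"])],
    remainder="passthrough",
)
model = Pipeline([
    ("preprocess", preprocess),
    ("forest", RandomForestClassifier(n_estimators=50, random_state=0)),
])
model.fit(X, y)

# Predict the class label for one new, equally hypothetical sample.
new_sample = pd.DataFrame({"alcohol": [12.9], "acidity": [0.30], "color": ["white"]})
prediction = model.predict(new_sample)
print(prediction)
```

Wrapping the encoder and the forest in one `Pipeline` keeps the same preprocessing applied at training and prediction time.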

Disadvantages

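- Can be computationally expensive, especially with many trees or large datasets.

- Is harder to interpret than simpler models such as a single decision tree.

- Can be biased toward the majority class when some classes dominate the data.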
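Two of these drawbacks have partial mitigations in scikit-learn, sketched below under assumptions: the `class_weight="balanced"` option reweights classes inversely to their frequency, and `feature_importances_` gives a rough view of which features the forest relies on. The imbalanced synthetic dataset is invented for illustration, and whether reweighting actually helps depends on your data.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import recall_score
from sklearn.model_selection import train_test_split

# Synthetic imbalanced data: roughly 95% class 0 and 5% class 1 (illustrative assumption).
X, y = make_classification(n_samples=2000, n_features=10, weights=[0.95, 0.05], random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0
)

# Compare minority-class recall with and without class reweighting.
recalls = {}
for label, class_weight in [("default", None), ("balanced", "balanced")]:
    forest = RandomForestClassifier(n_estimators=100, class_weight=class_weight, random_state=0)
    forest.fit(X_train, y_train)
    recalls[label] = recall_score(y_test, forest.predict(X_test))
    print(f"{label}: minority-class recall = {recalls[label]:.3f}")

# Impurity-based importances of the last fitted forest; they sum to 1.
importances = forest.feature_importances_
for i, value in enumerate(importances):
    print(f"feature {i}: {value:.3f}")
```

Compare the two recall values on your own data before adopting the reweighting; it is a knob to try, not a guaranteed fix.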

Steps to Implement:

- Import the libraries: `numpy`, `pandas`, and `sklearn`.

- Load and preprocess data: Load the dataset, handle missing values, and prepare features and target variables.

- Split the data: Use `train_test_split` to divide the data into training and testing sets.

- Create the model: From `sklearn.ensemble`, import `RandomForestClassifier` and create an instance.

- Train the model: Use the `fit` method on the training data.

- Make predictions: Use the `predict` method on the test data.

- Evaluate the model: Check model performance using evaluation metrics like accuracy, precision, recall, F1 score, or the confusion matrix.
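The steps above can be sketched end to end as follows. This is a minimal example under assumptions, not the code from the author's repository: it uses scikit-learn's built-in `load_wine` dataset as a stand-in (it classifies wine cultivars rather than quality), and the split ratio, `n_estimators`, and `random_state` values are arbitrary choices.

```python
import numpy as np
import pandas as pd
from sklearn.datasets import load_wine
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score, classification_report, confusion_matrix
from sklearn.model_selection import train_test_split

# Load the data as a pandas DataFrame (stand-in dataset, see note above).
df: pd.DataFrame = load_wine(as_frame=True).frame

# Preprocess: fill missing numeric values with the column median.
# This dataset has no missing values, so the step is only a placeholder.
df = df.fillna(df.median(numeric_only=True))

# Prepare features and target.
X = df.drop(columns="target")
y = df["target"]

# Split into training and testing sets, keeping class proportions similar.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42
)
print("Training samples per class:", np.bincount(y_train.to_numpy()))

# Create and train the model.
model = RandomForestClassifier(n_estimators=100, random_state=42)
model.fit(X_train, y_train)

# Make predictions on the test data.
y_pred = model.predict(X_test)

# Evaluate: accuracy, per-class precision/recall/F1, and the confusion matrix.
accuracy = accuracy_score(y_test, y_pred)
cm = confusion_matrix(y_test, y_pred)
print(f"Accuracy: {accuracy:.3f}")
print(classification_report(y_test, y_pred))
print(cm)
```

To adapt this to your own data, replace the loading step with something like `pd.read_csv` on your file and point `X` and `y` at your feature and target columns.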
