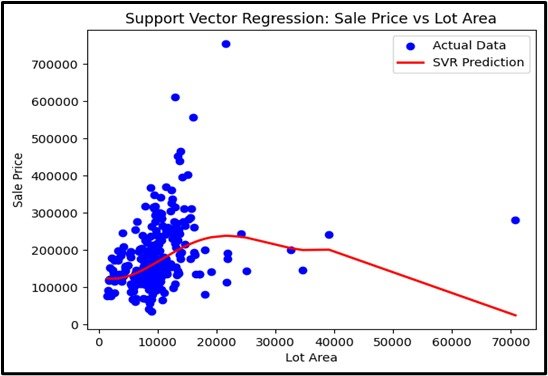

Support Vector Machine (SVM)

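A Support Vector Machine (SVM) is a supervised machine learning algorithm used for both classification and regression tasks. For classification, it finds the optimal hyperplane that separates the data into classes. The hyperplane is chosen to maximize the margin, which is the distance between the hyperplane and the nearest data points from each class. These nearest points are called support vectors. For regression (Support Vector Regression, SVR), the same idea is used to fit a function that ignores errors smaller than a tolerance ε and penalizes larger deviations.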
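Margin maximization can be written as an optimization problem. The block below uses the standard linear formulation. The symbols w, b, ξ_i and C are introduced here for illustration and are not part of the original text. Training points are x_i with labels y_i ∈ {−1, +1}. Because the margin width is 2/‖w‖, maximizing the margin is the same as minimizing ‖w‖:

```latex
\begin{aligned}
&\text{Hyperplane: } w^\top x + b = 0, \qquad \text{margin width} = \frac{2}{\lVert w \rVert} \\[4pt]
&\text{Hard margin: } \min_{w,\,b} \; \tfrac{1}{2}\lVert w \rVert^2
  \quad \text{s.t. } y_i\,(w^\top x_i + b) \ge 1, \quad i = 1,\dots,n \\[4pt]
&\text{Soft margin: } \min_{w,\,b,\,\xi} \; \tfrac{1}{2}\lVert w \rVert^2 + C \sum_{i=1}^{n} \xi_i
  \quad \text{s.t. } y_i\,(w^\top x_i + b) \ge 1 - \xi_i, \;\; \xi_i \ge 0
\end{aligned}
```

In the soft-margin form, the slack variables ξ_i let individual points violate the margin. C sets how heavily those violations are penalized.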

- Concept: A supervised learning algorithm for classification and regression that finds the optimal hyperplane to separate classes or predict values.

- Hyperplane: The decision boundary that separates classes.

- Support Vectors: The training points that lie on or within the margin. They alone determine where the hyperplane is placed.

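- Kernel Functions: Implicitly map data into a higher-dimensional space. There, a linear boundary can separate classes that are not linearly separable in the original space. Common choices are linear, polynomial, RBF, and sigmoid kernels.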

- Margin: The distance between the hyperplane and the nearest support vectors. SVM aims to maximize this margin to improve classification accuracy and generalization.

- Applications: Used in text classification, image recognition, and complex regression tasks.
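The hyperplane, support vectors, and margin can be read directly off a fitted linear model. This minimal sketch uses scikit-learn's `SVC` on a tiny, made-up, linearly separable 2-D dataset. A very large `C` is used so the soft-margin solver behaves approximately like a hard margin. The margin width is computed as 2/‖w‖ from the fitted `coef_`, which is only available for the linear kernel.

```python
import numpy as np
from sklearn.svm import SVC

# Toy, linearly separable 2-D data (made up for illustration)
X = np.array([[1.0, 1.0], [2.0, 1.0], [1.0, 2.0],
              [4.0, 4.0], [5.0, 4.0], [4.0, 5.0]])
y = np.array([0, 0, 0, 1, 1, 1])

# A very large C approximates a hard margin on separable data
clf = SVC(kernel="linear", C=1e6)
clf.fit(X, y)

w = clf.coef_[0]        # normal vector of the hyperplane (linear kernel only)
b = clf.intercept_[0]   # offset of the hyperplane
margin = 2 / np.linalg.norm(w)

print(f"hyperplane: {w[0]:.3f}*x1 + {w[1]:.3f}*x2 + {b:.3f} = 0")
print("support vectors:\n", clf.support_vectors_)
print(f"margin width: {margin:.3f}")
```

Only the three points nearest the opposite class become support vectors. Moving any of the other points, without crossing the margin, would leave the hyperplane unchanged.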

Model Overview

Purpose: Classify data, or predict continuous values, by finding the hyperplane (or function) with the largest margin.

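Input Data: Numerical features. Categorical variables must be encoded, and features should be scaled, because SVMs are sensitive to feature scale.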

Output: A class label (classification) or a continuous value (regression).
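The two output types correspond to two scikit-learn estimators: `SVC` returns class labels and `SVR` returns continuous values. This sketch fits both on synthetic, made-up data with one numerical feature. In `SVR`, the `epsilon` parameter is the tolerance inside which prediction errors are not penalized. The values chosen here are illustrative, not tuned.

```python
import numpy as np
from sklearn.svm import SVC, SVR

rng = np.random.default_rng(0)
X = rng.uniform(0, 5, size=(100, 1))   # one numerical input feature

# Classification target: a class label (0 or 1)
y_class = (X[:, 0] > 2.5).astype(int)
# Regression target: a continuous value (noisy sine curve)
y_reg = np.sin(X[:, 0]) + rng.normal(0, 0.1, size=100)

clf = SVC(kernel="rbf").fit(X, y_class)
reg = SVR(kernel="rbf", C=1.0, epsilon=0.1).fit(X, y_reg)

X_new = np.array([[1.0], [4.0]])
labels = clf.predict(X_new)   # discrete class labels
values = reg.predict(X_new)   # continuous predictions
print("SVC labels:", labels)
print("SVR values:", values)
```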

Assumptions

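The classes are linearly separable, either in the original feature space or after a kernel maps the data into a higher-dimensional space. Soft-margin SVMs, controlled by the `C` parameter, tolerate some overlap between classes.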
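The following sketch illustrates the "transformed into a linearly separable space" case. It assumes scikit-learn's synthetic `make_circles` dataset: two concentric rings that no straight line can separate. It then compares a linear kernel with an RBF kernel on the same split.

```python
from sklearn.datasets import make_circles
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Two concentric rings: not linearly separable in the original 2-D space
X, y = make_circles(n_samples=400, factor=0.3, noise=0.05, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42
)

scores = {}
for kernel in ["linear", "rbf"]:
    model = SVC(kernel=kernel).fit(X_train, y_train)
    scores[kernel] = model.score(X_test, y_test)   # mean test accuracy
    print(f"{kernel:>6} kernel test accuracy: {scores[kernel]:.2f}")
```

The linear kernel can do little better than chance here. The RBF kernel implicitly maps the points into a space where the rings become separable.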

Use Case

Support Vector Machines are a good fit for complex, high-dimensional datasets. For example, an SVM can classify handwritten digits based on their pixel values.
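The handwritten-digit use case can be sketched with scikit-learn's bundled `load_digits` dataset. Each image is 8x8 grayscale, flattened to 64 pixel features with values from 0 to 16. The kernel and `C` below are library defaults, not tuned values.

```python
from sklearn.datasets import load_digits
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

digits = load_digits()             # 1,797 images of the digits 0 to 9
X, y = digits.data, digits.target  # 64 pixel features per image, integer labels
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0, stratify=y
)

clf = SVC(kernel="rbf", gamma="scale", C=1.0)
clf.fit(X_train, y_train)
accuracy = accuracy_score(y_test, clf.predict(X_test))
print(f"Test accuracy: {accuracy:.3f}")
```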

Advantages

- Effective on high-dimensional data, even when the number of features exceeds the number of samples.

- Handles both linear and non-linear data: different kernel functions (the kernel trick) let it learn non-linear decision boundaries.

Disadvantages

- Training is computationally expensive on large datasets: kernel SVM fit time grows at least quadratically with the number of samples.

- Requires careful tuning of hyperparameters (such as `C` and `gamma`) and of the kernel choice.

- Difficult to interpret the model, especially with non-linear kernels.
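Hyperparameter and kernel tuning is commonly done with a cross-validated grid search. This sketch uses scikit-learn's `GridSearchCV` on the bundled breast-cancer dataset. The pipeline includes `StandardScaler`, so scaling is refit inside each fold. The grid values are illustrative assumptions, not recommendations. Separate sub-grids are used so that `gamma` is only searched for the RBF kernel.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0, stratify=y
)

# Scaling lives inside the pipeline, so it is fit only on each training fold
pipe = Pipeline([("scaler", StandardScaler()), ("svc", SVC())])

# gamma only matters for the RBF kernel, so it gets its own sub-grid
param_grid = [
    {"svc__kernel": ["linear"], "svc__C": [0.1, 1, 10]},
    {"svc__kernel": ["rbf"], "svc__C": [0.1, 1, 10], "svc__gamma": ["scale", 0.01, 0.1]},
]

search = GridSearchCV(pipe, param_grid, cv=5, scoring="accuracy")
search.fit(X_train, y_train)
test_acc = search.score(X_test, y_test)   # uses the refit best model
print("best params:", search.best_params_)
print(f"test accuracy: {test_acc:.3f}")
```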

Steps to Implement:

- Import necessary libraries: Use `numpy`, `pandas`, and `sklearn`.

- Load and preprocess data: Load the dataset, handle missing values, and prepare features and target variables.

- Split the data: Use `train_test_split` to divide the data into training and testing sets.

- Import and instantiate the model: From `sklearn.svm`, import and create an instance of `SVC` (Support Vector Classification), or `SVR` (Support Vector Regression) for continuous targets.

- Train the model: Use the `fit` method on the training data.

- Make predictions: Use the `predict` method on the test data.

- Evaluate the model: For classification, check performance with metrics such as accuracy, precision, recall, F1 score, or the confusion matrix. For regression, use metrics such as mean squared error or R².
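The steps above can be sketched end to end as follows. This assumes scikit-learn's bundled breast-cancer dataset, loaded into a pandas DataFrame in place of your own data. Feature scaling is placed in a pipeline so the scaler is fit on the training split only. The `dropna` call only illustrates where missing-value handling goes; this dataset has no missing values.

```python
import pandas as pd
from sklearn.datasets import load_breast_cancer
from sklearn.metrics import accuracy_score, classification_report, confusion_matrix
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Load the data into a DataFrame and handle missing values
data = load_breast_cancer()
df = pd.DataFrame(data.data, columns=data.feature_names)
df["target"] = data.target
df = df.dropna()                         # no-op here; placeholder for real cleaning
X = df.drop(columns="target")
y = df["target"]

# Split into training and test sets
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

# Instantiate SVC behind a scaler (scaler is fit on training data only)
model = make_pipeline(StandardScaler(), SVC(kernel="rbf", C=1.0, gamma="scale"))

# Train the model
model.fit(X_train, y_train)

# Make predictions on the test set
y_pred = model.predict(X_test)

# Evaluate with accuracy, the confusion matrix, and per-class precision/recall/F1
acc = accuracy_score(y_test, y_pred)
cm = confusion_matrix(y_test, y_pred)
print(f"Accuracy: {acc:.3f}")
print("Confusion matrix:\n", cm)
print(classification_report(y_test, y_pred, target_names=data.target_names))
```

For a regression target, the same structure applies with `SVR` in place of `SVC` and a regression metric such as `mean_squared_error` in place of the classification metrics.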
