XGBoost Classification

XGBoost (Extreme Gradient Boosting) Classification is a gradient-boosting algorithm optimized for speed and performance. It constructs a series of decision trees sequentially, where each tree corrects the errors of its predecessor. XGBoost focuses on improving both accuracy and computational efficiency, making it highly effective for a wide range of classification tasks.
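To make the idea of each tree correcting its predecessor concrete, here is a minimal sketch of plain gradient boosting for binary classification. It is not XGBoost's actual implementation (XGBoost also uses second-order gradient information and regularization); it is a simplified illustration that assumes synthetic data from `make_classification` and uses scikit-learn's `DecisionTreeRegressor` as the weak learner. The learning rate, tree depth, and number of rounds are arbitrary illustrative choices.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.tree import DecisionTreeRegressor


def sigmoid(z):
    """Map raw scores (log-odds) to probabilities."""
    return 1.0 / (1.0 + np.exp(-z))


def log_loss(y, p):
    """Mean binary cross-entropy, clipped for numerical safety."""
    p = np.clip(p, 1e-12, 1 - 1e-12)
    return float(-np.mean(y * np.log(p) + (1 - y) * np.log(1 - p)))


# Synthetic binary classification data (illustrative assumption).
X, y = make_classification(n_samples=500, n_features=10, random_state=0)

learning_rate = 0.3
n_rounds = 20
raw_score = np.zeros(len(y))  # start at log-odds 0, i.e. probability 0.5
trees, losses = [], []

for _ in range(n_rounds):
    # Negative gradient of the log loss with respect to the raw score:
    # this is the "error" the next tree is trained to correct.
    residual = y - sigmoid(raw_score)
    tree = DecisionTreeRegressor(max_depth=3, random_state=0).fit(X, residual)
    raw_score += learning_rate * tree.predict(X)
    trees.append(tree)
    losses.append(log_loss(y, sigmoid(raw_score)))

train_accuracy = float(np.mean((sigmoid(raw_score) > 0.5) == y))
print(f"log loss after round 1: {losses[0]:.3f}, after round {n_rounds}: {losses[-1]:.3f}")
print(f"training accuracy: {train_accuracy:.3f}")
```

The training loss shrinks as rounds are added because every new tree is fitted to what the current ensemble still gets wrong.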

- Concept: XGBoost builds multiple decision trees sequentially. Each tree aims to correct the errors made by the previous ones, which improves accuracy step by step.

- Sequential Tree Building: Constructs trees one after another, with each tree focusing on correcting errors from previous models.

- Optimization for Speed: Emphasizes both accuracy and computational efficiency.

- Applications: XGBoost Classification is used in various fields, including:

- Finance: Fraud detection, credit scoring.

- Healthcare: Disease diagnosis, patient risk prediction.

Enhancing Model

Purpose: This ML algorithm classifies the target variable into distinct categories using an ensemble of decision trees built sequentially.

Input Data: Numerical or categorical variables (features).

Output: A categorical value (class label).

Assumptions

It assumes that the classification problem can be solved by combining many weak decision trees into a strong classifier.

Use Case

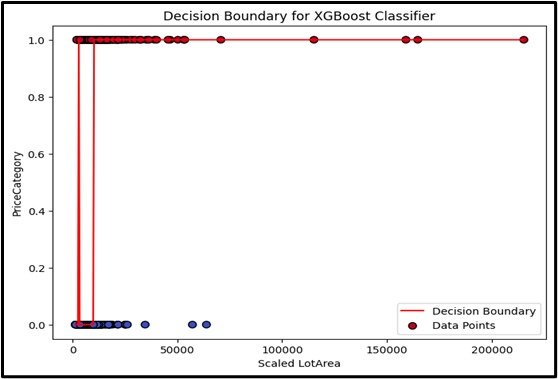

XGBoost Classification suits large, complex datasets where high accuracy is required. For example, it can predict whether a customer will stop using a service based on their usage patterns, demographic data, and interactions with customer service.

Advantages

- It handles missing values and various data distributions.

- It supports regularization to reduce overfitting.

- It is efficient with large datasets thanks to parallel processing and tree pruning.

Disadvantages

- It needs a lot of computational power to train on large datasets.

- It can be complex to tune because it has many hyperparameters.

- It is less interpretable than a single decision tree.

Steps to Implement:

- Install `xgboost` if not already installed, and import `xgboost` along with `numpy`, `pandas`, and `sklearn`.

- Load and preprocess data: Load the dataset, handle missing values, and prepare features and target variables.

- Split the data: Use `train_test_split` to divide the data into training and testing sets.

- Import and instantiate XGBClassifier: From `xgboost`, import and create an instance of `XGBClassifier`.

- Train the model: Use the `fit` method on the training data.

- Make predictions: Use the `predict` method on the test data.

- Evaluate the model: Check model performance using evaluation metrics like accuracy, precision, recall, F1 score, or the confusion matrix.
