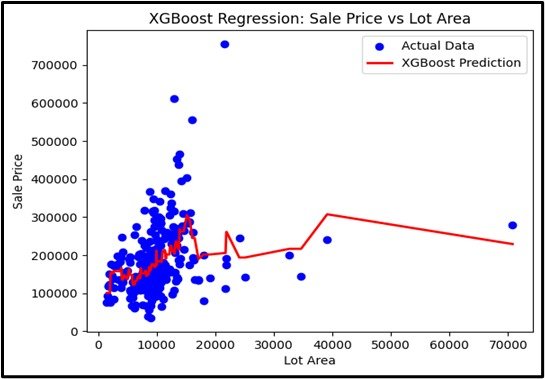

XGBoost Regression

XGBoost Regression is a machine learning algorithm that enhances the gradient boosting framework by making it faster, more flexible, and easier to use in a variety of applications. It is particularly effective for structured or tabular data and is widely used in data science competitions and industry due to its performance and scalability.

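- Concept: XGBoost (Extreme Gradient Boosting) is an advanced ensemble learning method that combines multiple weak learners, typically shallow decision trees, into a strong predictive model.

- Weak Learners: XGBoost builds its predictive power by adding weak learners one at a time. Each tree is only a modest predictor on its own, but their combined output is accurate.

- Adaptive Weighting: Each iteration concentrates on the instances that the current ensemble predicts poorly. Rather than reweighting instances as AdaBoost does, XGBoost fits each new tree to the gradient of the loss with respect to the current predictions (for squared error, the residuals), so larger errors have more influence on the next tree.

- Applications: XGBoost is widely used in scenarios where accurate prediction of continuous variables is crucial.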
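To make the idea of sequentially combining weak learners concrete, here is a minimal sketch of plain gradient boosting with squared-error loss. It is not XGBoost itself, which also uses second-order gradient information and regularization; the synthetic data, `learning_rate`, and `n_rounds` are illustrative assumptions.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

# Synthetic 1-D regression problem (an assumption for illustration only).
rng = np.random.default_rng(0)
X = rng.uniform(-3, 3, size=(200, 1))
y = np.sin(X[:, 0]) + rng.normal(scale=0.1, size=200)

learning_rate = 0.1
n_rounds = 50

# Start from a constant prediction: the mean of the target.
prediction = np.full_like(y, y.mean())
initial_mse = np.mean((y - prediction) ** 2)

trees = []
for _ in range(n_rounds):
    # For squared error, the negative gradient is the residual
    # (up to a constant factor).
    residuals = y - prediction
    tree = DecisionTreeRegressor(max_depth=2)  # a deliberately weak learner
    tree.fit(X, residuals)
    # Add a damped version of the new tree's output to the ensemble.
    prediction += learning_rate * tree.predict(X)
    trees.append(tree)

final_mse = np.mean((y - prediction) ** 2)
print(f"Training MSE before boosting: {initial_mse:.4f}")
print(f"Training MSE after {n_rounds} rounds: {final_mse:.4f}")
```

Each round fits a small tree to what the ensemble still gets wrong, which is why the training error shrinks as rounds accumulate.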

Enhancing Model

Purpose: This algorithm provides accurate and fast predictions.

Input Data: Numerical and categorical variables.

Output: Continuous value.

Assumptions

No specific assumptions about the relationship between the predictors and response.

Use Case

Use XGBoost Regression when you need high performance and efficiency in prediction tasks. For example, predicting housing prices in California based on features like median income, house age, and number of bedrooms.

Advantages

- High predictive power.

- It is fast and efficient.

- It handles missing values natively and, as a tree-based model, copes well with outliers in the input features.

Disadvantages

- May overfit if not properly regularized.

- Can be sensitive to noisy data.

Steps to Implement:

- Import libraries like `numpy`, `pandas`, and `sklearn`.

- Load and preprocess data: Load the dataset, handle missing values, and prepare features and target variables.

- Split the dataset: Use `train_test_split` to divide the data into training and testing sets.

- From `xgboost`, import and create an instance of `XGBRegressor`.

- Train the model: Use the `fit` method on the training data.

- Make predictions: Use the `predict` method on the test data.

- Evaluate the model: Check model performance using evaluation metrics like R-squared or MSE.

Ready to Explore?

Check Out My GitHub Code